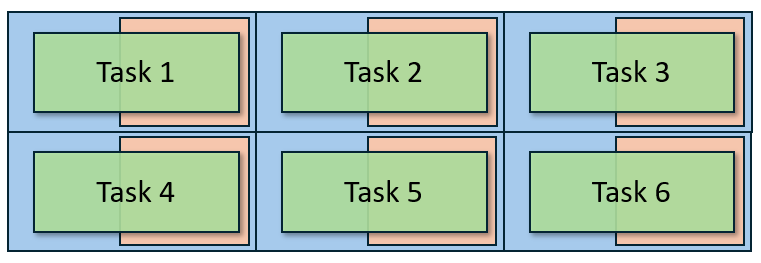

Some computing jobs are “embarrassingly parallel”, meaning they consist of many processes that are completely independent of each other. An embarrassingly parallel task has a workload like the one illustrated above:

Every task uses a single core, and memory is not shared amongst the tasks.
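To make the idea concrete outside of Slurm, here is a minimal single-machine sketch using Python's standard multiprocessing module. It is only an analogue, not how the Slurm job below works: a hypothetical function evaluate is applied to each input independently, so the inputs can be handed to separate worker processes that share no memory and never communicate.

```python
from multiprocessing import Pool


def evaluate(x: float) -> float:
    """Hypothetical stand-in for an expensive, independent computation."""
    return x * x


def map_in_parallel(inputs, workers: int = 4) -> list:
    # Each input goes to whichever worker process is free; the workers
    # never share memory or exchange data with each other.
    with Pool(processes=workers) as pool:
        return pool.map(evaluate, inputs)


if __name__ == "__main__":
    print(map_in_parallel(range(1, 11)))
```

Because no evaluation depends on another, the order in which workers finish does not matter; pool.map still returns the results in input order.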

Parallelism with “srun --exclusive”

For example, suppose you want to observe the output of a function over a wide range of input parameters. You could execute the function in serial for every parameter of interest; however, if the function is computationally expensive to evaluate, this approach may be prohibitively slow. Because each evaluation of the function is completely independent, you can instead perform the same job in parallel, passing each parameter as a command-line argument to a separate call of srun. That is, you can execute multiple non-communicating processes within a single Slurm batch script. Consider the following simple Python program:

square_number.py:

    import sys

    if __name__ == "__main__":
        # Read the first command-line argument and convert it to a number
        args = sys.argv[1]
        x = float(args)
        print(f"x = {x}, x^2 = {x*x}")
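For comparison, the serial approach described earlier would evaluate every parameter one after another inside a single process. A minimal sketch, assuming the same squaring logic is wrapped in a hypothetical helper square (not part of square_number.py):

```python
def square(x: float) -> float:
    """Same computation as square_number.py, as a plain function (hypothetical helper)."""
    return x * x


def run_serial(values) -> list:
    # One process, one core: each evaluation waits for the previous one.
    return [f"x = {float(v)}, x^2 = {square(float(v))}" for v in values]


if __name__ == "__main__":
    for line in run_serial(range(1, 101)):
        print(line)
```

Either way, each evaluation produces one line of output; for an input of 3 that line is "x = 3.0, x^2 = 9.0". The batch script changes only how the 100 evaluations are scheduled, running them as separate processes instead of one after another.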

We can execute the code with the following batch script:

    #!/bin/bash
    #SBATCH --job-name=parallel_job_test
    #SBATCH --partition=short
    #SBATCH --output=slurm_%x_%j.out
    #SBATCH --error=slurm_%x_%j.err
    #SBATCH --time=00:10:00
    #SBATCH --ntasks=100
    #SBATCH --mem=1gb

    # Replace with the path to your working directory
    cd /path/to/working/directory

    # Launch one single-core job step per input value, in the background
    for i in {1..100}; do
        srun --exclusive --nodes=1 --ntasks=1 --cpus-per-task=1 --cpu-bind=none python square_number.py $i &
    done

    # Block until every background srun has finished
    wait

This script executes square_number.py for every integer from 1 to 100 by scheduling each execution with srun. Note that this time, we have requested 100 tasks with #SBATCH --ntasks=100. Let’s take a closer look at the srun command:

    srun --exclusive --nodes=1 --ntasks=1 --cpus-per-task=1 --cpu-bind=none python square_number.py $i &

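Each call to srun requests a single node, a single task (process), and a single CPU core. We have also provided the --exclusive flag, which crucially tells Slurm to launch each process only when an allocated CPU is available. The job will not execute correctly without this flag. Finally, the ampersand (&) at the end of the line tells bash to run the command in the background, so the loop moves on to the next iteration without waiting for the current srun to finish; without it, the calls would execute one after another, in serial. The wait command at the end of the script then pauses the script until all of the background srun calls have completed. Without it, the batch script would exit immediately, ending the job before the tasks finish.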
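The combination of &, wait, and Slurm holding back steps until a CPU is free behaves much like a launcher with a fixed number of slots. The sketch below is a local Python analogue under stated assumptions, not Slurm itself: it runs a hypothetical inline copy of square_number.py through the current Python interpreter for each input, allows at most max_parallel processes at once (playing the role of the allocated CPUs), and returns only after every process has finished, as wait does.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Hypothetical inline stand-in for square_number.py, run with "python -c".
SCRIPT = 'import sys; x = float(sys.argv[1]); print(f"x = {x}, x^2 = {x*x}")'


def run_one(i: int) -> str:
    # Like one "srun ... python square_number.py $i": an independent process.
    result = subprocess.run(
        [sys.executable, "-c", SCRIPT, str(i)],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def launch_all(values, max_parallel: int = 10) -> list:
    # At most max_parallel processes run at once; the rest wait for a free
    # slot, similar to job steps waiting for an allocated CPU.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # list() collects every result, so this returns only after all
        # processes have finished, like "wait" in the batch script.
        return list(pool.map(run_one, values))


if __name__ == "__main__":
    for line in launch_all(range(1, 101)):
        print(line)
```

Threads are used here only to start and wait on the child processes; the actual work happens in separate Python processes, just as each srun call starts its own process.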