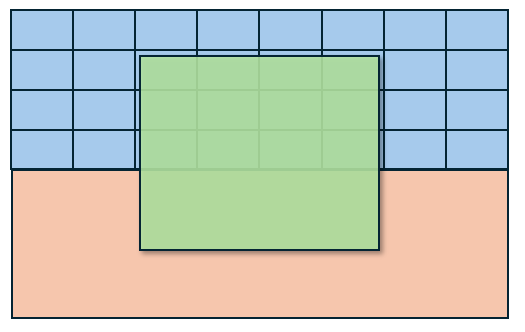

If parallel processes need to share access to resources, you may need to write your code to execute across multiple threads within a single task. In this case, all threads have access to the same shared memory pool.

Multithreading can be implemented in many different ways, and the approach generally depends on the programming language. As an example, consider the following simple C program, which uses OpenMP to multiply every number in an array by a fixed constant provided as a command-line argument:

$ test.c

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

int f(int x, int c) {

return x * c;

}

int main(int argc, char *argv[]) {

int array[6] = {0, 1, 2, 3, 4, 5};

int results[6];

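// Require the constant as the first command-line argument

if (argc < 2) {

fprintf(stderr, "usage: %s <constant>\n", argv[0]);

return 1;

}

int c = atoi(argv[1]);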

#pragma omp parallel for

for (int i = 0; i < 6; i++) {

results[i] = f(array[i], c);

}

for (int i = 0; i < 6; i++) {

printf("f(%d, %d) = %d\n", array[i], c, results[i]);

}

}

The code can be executed by the following batch script:

#!/bin/bash

#SBATCH --job-name=openmp_test

#SBATCH --partition=short

#SBATCH --time=00:10:00

#SBATCH --output=slurm_%x_%j.out

#SBATCH --error=slurm_%x_%j.err

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=6

#SBATCH --mem-per-cpu=500mb

cd (path to working directory)

gcc -fopenmp test.c -o test

./test 2

The script compiles the program with OpenMP enabled and then executes it as a single process with 6 threads. Each thread has access to the results array and writes the value of the function f into it. Because each loop iteration writes to a different element, no synchronization between threads is needed. Note that the environment variable OMP_NUM_THREADS, which determines the number of threads available to OpenMP, is automatically set equal to SLURM_CPUS_PER_TASK.
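To check how many threads a job actually receives, OpenMP can be queried at run time. The following is a minimal sketch, not part of the programs above: the helper names `available_threads` and `threads_in_region` are hypothetical, and the `_OPENMP` guard is an added assumption so that the file still compiles, as a single-threaded program, when `-fopenmp` is omitted.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: upper bound on the number of threads that a
   parallel region would use by default (1 if built without OpenMP). */
int available_threads(void) {
#ifdef _OPENMP
    return omp_get_max_threads();
#else
    return 1;
#endif
}

/* Hypothetical helper: counts the threads that actually join a
   parallel region by having each one increment a shared counter. */
int threads_in_region(void) {
    int count = 0;
    #pragma omp parallel
    {
        /* atomic protects the shared counter from concurrent updates */
        #pragma omp atomic
        count++;
    }
    assert(count >= 1);
    return count;
}
```

Calling both functions from `main` and printing the values inside a job submitted with `--cpus-per-task=6` would be expected to report 6, provided OMP_NUM_THREADS is set as described above.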

A single node on Andromeda is equipped with a maximum of 64 CPU cores and 250GB of memory. Since multithreading is generally much easier to accomplish than multiprocessing with communication (i.e. with OpenMPI; see below), it is very common to request an entire node and use all 60 CPU cores available (note that 4 CPU cores are reserved for the Weka filesystem).

As an example, we can sum a very large array by splitting it into one chunk per thread (60 chunks when all 60 available CPU cores are used) and performing the sum on each chunk in a separate thread with the following code:

$ bigtest.c

#include <stdio.h>

#include <omp.h>

#define ARRAY_SIZE 1000000

int main() {

// static storage keeps this ~4 MB array off the stack

static int array[ARRAY_SIZE];

// Initialize array with values

for (int i = 0; i<ARRAY_SIZE; i++) {

array[i] = i + 1;

}

long long sum = 0;

#pragma omp parallel

{

// Each thread executes this block of code

int thread_id = omp_get_thread_num();

int chunk_size = ARRAY_SIZE / omp_get_num_threads();

int start = thread_id * chunk_size;

int end = (thread_id == omp_get_num_threads() - 1) ?

ARRAY_SIZE : (thread_id + 1) * chunk_size;

long long local_sum = 0;

// Each thread sums a portion of the array

for (int i = start; i < end; i++) {

local_sum += array[i];

}

// Define a 'critical' section, which will only be executed on a single thread at a time,

// preventing multiple concurrent accesses to the same variable

#pragma omp critical

{

sum += local_sum;

printf("Thread %d contributed %lld to the total sum.\n", thread_id, local_sum);

}

}

// Should output the sum of the integers from 1 to ARRAY_SIZE

// = ARRAY_SIZE * (ARRAY_SIZE + 1) / 2 = 500000500000

printf("Sum of elements: %lld\n", sum);

return 0;

}
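The start and end arithmetic in bigtest.c can be isolated to see how the elements are divided when the array size is not a multiple of the thread count. This is a minimal sketch: `chunk_bounds` and `chunk_length` are hypothetical helpers introduced only for illustration, and they mirror the expressions used inside the parallel region.

```c
#include <assert.h>

/* Hypothetical helper mirroring the start/end computation in bigtest.c:
   every thread gets n / num_threads elements, and the last thread also
   takes whatever remains after the integer division. */
void chunk_bounds(int thread_id, int num_threads, int n, int *start, int *end) {
    assert(num_threads > 0 && thread_id >= 0 && thread_id < num_threads);
    int chunk_size = n / num_threads;
    *start = thread_id * chunk_size;
    *end = (thread_id == num_threads - 1) ? n : (thread_id + 1) * chunk_size;
}

/* Hypothetical convenience wrapper: number of elements for one thread. */
int chunk_length(int thread_id, int num_threads, int n) {
    int start, end;
    chunk_bounds(thread_id, num_threads, n, &start, &end);
    return end - start;
}
```

With ARRAY_SIZE = 1000000 and 60 threads, threads 0 through 58 each receive 16666 elements, and thread 59 receives 16706 (its own 16666 plus the 40 left over).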

The corresponding batch script for bigtest.c:

$ bigtest.sl

#!/bin/bash

#SBATCH --job-name=big_openmp_test

#SBATCH --time=00:10:00

#SBATCH --partition=short

#SBATCH --output=slurm_%x_%j.out

#SBATCH --error=slurm_%x_%j.err

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=60

#SBATCH --mem=250GB

cd (path to working directory)

gcc -fopenmp bigtest.c -o test

./test
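The manual chunking and critical section in bigtest.c can also be expressed with OpenMP's `reduction` clause, which gives each thread a private copy of the accumulator and combines the copies when the loop ends. This is a sketch of an alternative technique, not the method used above; the function name `sum_with_reduction` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: sums the first n elements of data.
   reduction(+:sum) gives every thread its own private sum, initialized
   to 0, and adds the private copies together after the loop, so no
   critical section is needed. Without -fopenmp the pragma is ignored
   and the loop runs serially with the same result. */
long long sum_with_reduction(const int *data, int n) {
    assert(data != NULL && n >= 0);
    long long sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (int i = 0; i < n; i++) {
        sum += data[i];
    }
    return sum;
}
```

Compared with the explicit version, this form leaves the division of iterations among threads to the OpenMP runtime, at the cost of no longer printing a per-thread contribution.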