The NVIDIA System Management Interface, ‘nvidia-smi’, is used to monitor NVIDIA GPU devices and reports their usage, such as utilization and memory consumption. On a node with four GPUs, for example, users can list each GPU and its UUID as below (only the first line of output is shown):

[johnchris@g006 ~]$ nvidia-smi --list-gpus

GPU 0: NVIDIA A100-PCIE-40GB (UUID: GPU-fe6043fd-a319-0ade-3d9d-9dd27b2a1442)

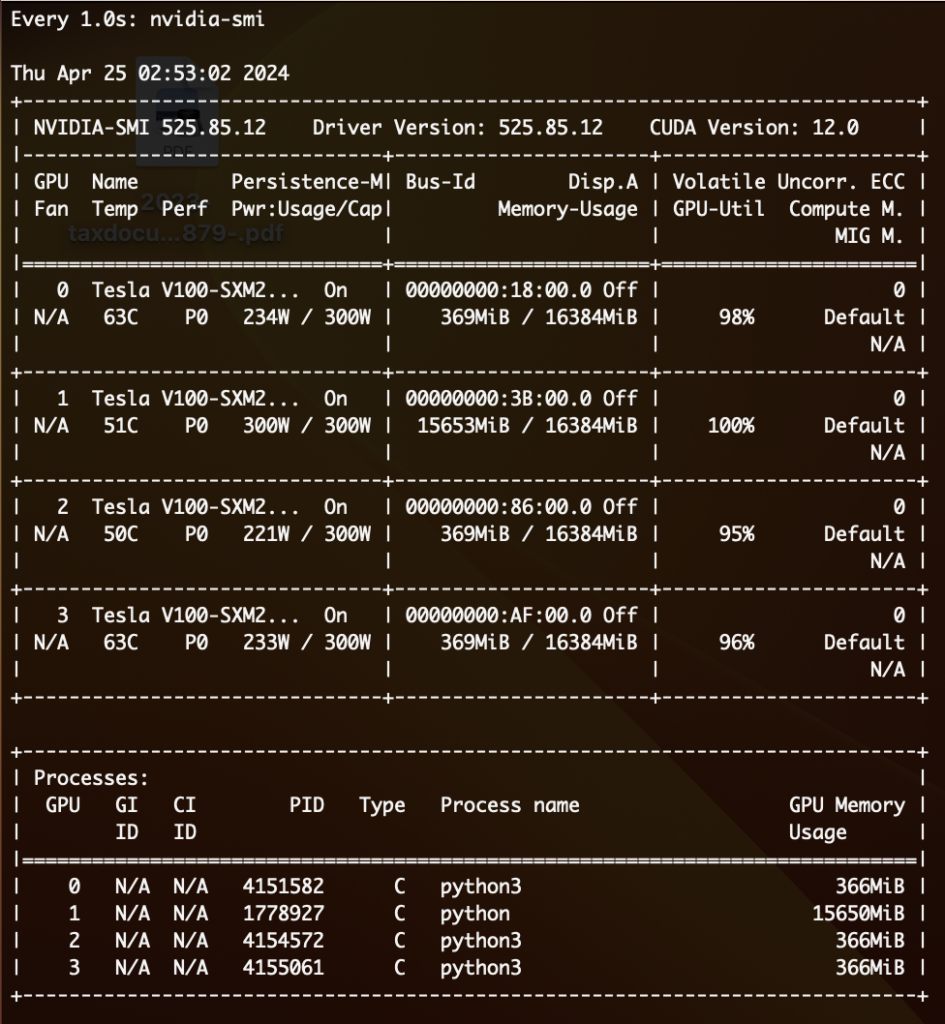
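When only the UUIDs are needed, for example to target one GPU with ‘nvidia-smi -i’, which accepts an index, UUID, or PCI bus ID, the listing above can be filtered. The sketch below assumes the output keeps the ‘(UUID: GPU-…)’ layout shown above. The function name ‘gpu_uuids’ is hypothetical and not part of nvidia-smi.

```shell
# Hypothetical helper: print only the UUIDs from `nvidia-smi --list-gpus`
# output, one per line. Assumes input lines look like:
#   GPU 0: NVIDIA A100-PCIE-40GB (UUID: GPU-fe6043fd-a319-0ade-3d9d-9dd27b2a1442)
gpu_uuids() {
  # Keep only the text inside "(UUID: ...)" and drop every other line.
  sed -n 's/.*(UUID: \(GPU-[^)]*\)).*/\1/p'
}

# Usage on a GPU node (requires nvidia-smi):
#   nvidia-smi --list-gpus | gpu_uuids
```

Because the function reads standard input, it can be tested without a GPU by piping in a saved copy of the listing.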

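The command ‘watch -n 1 nvidia-smi’ re-runs ‘nvidia-smi’ every second, giving a continuously updated view of GPU usage. Users who have a job running on a GPU node can SSH to that node and run these commands there. The image shows node g009, which has four Tesla V100 GPUs installed, each with 16 GB of GPU memory (64 GB in total). At the moment captured, about 25% of the total GPU memory is in use (16748 MiB of 65356 MiB, roughly 16.4 GiB of 63.8 GiB), while the GPU utilization of the four GPUs is 98%, 100%, 95%, and 96%, respectively. GPU utilization is the percentage of time during the last sample period in which one or more kernels were running on the GPU, so it measures how busy the GPU is rather than how much compute is available.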
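The same figures can be read in a script-friendly form with ‘nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits’. The sketch below, a hypothetical helper named ‘summarize_gpus’, prints each GPU's utilization and memory and then repeats the total memory arithmetic from the paragraph above. It assumes the CSV columns are in exactly that order.

```shell
# Hypothetical helper: summarize per-GPU utilization and total memory use.
# Expects lines of "index, utilization.gpu, memory.used, memory.total"
# (utilization in %, memory in MiB), as produced by --format=csv,noheader,nounits.
summarize_gpus() {
  awk -F', *' '
    {
      printf "GPU %s: %s%% utilization, %s/%s MiB\n", $1, $2, $3, $4
      used += $3; total += $4      # accumulate memory across all GPUs
    }
    END {
      if (total > 0)
        printf "Total: %d/%d MiB (%.1f%%)\n", used, total, 100 * used / total
    }'
}

# Usage on a GPU node (requires nvidia-smi):
#   nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total \
#              --format=csv,noheader,nounits | summarize_gpus
```

For a refreshing view, the pipeline can be wrapped in ‘watch -n 1’, as with plain ‘nvidia-smi’. Keeping the parsing in a function that reads standard input lets it be checked against saved output.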

For full details on this command, see its manual page: ‘man nvidia-smi’.

Note: If GPU utilization is unexpectedly 0% while a job is running, the user needs to determine why the job is not using the GPU.
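To spot idle GPUs without reading the full table, the utilization column can be filtered. The sketch below is a hypothetical helper, ‘idle_gpus’, that prints the index of every GPU reporting 0% in ‘index, utilization.gpu’ CSV output. A single 0% reading can be a momentary pause between kernels, so it is worth sampling a few times before concluding that a job is not using the GPU.

```shell
# Hypothetical helper: print the index of each GPU whose utilization is 0%.
# Expects lines of "index, utilization.gpu" from --format=csv,noheader,nounits.
idle_gpus() {
  awk -F', *' '$2 == 0 { print $1 }'
}

# Usage on a GPU node (requires nvidia-smi):
#   nvidia-smi --query-gpu=index,utilization.gpu --format=csv,noheader,nounits | idle_gpus
```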